Artificial intelligence tools can now produce highly realistic text, images, and videos at almost no cost. This has prompted concerns that the proliferation of fabricated content could cost mainstream news outlets both the trust of their audiences and the demand for their content. This column reports the results of a field experiment run in partnership with one of Germany’s most respected news outlets. The results suggest that when the threat of misinformation becomes salient, the value of credible news increases. To preserve this advantage, media outlets must continually invest in helping readers distinguish fact from fabrication, keeping pace with the rapid evolution of AI.

Concerns about misinformation have intensified in recent years. Scholars have shown how false or misleading news can distort political debate, polarise societies, and weaken democratic institutions (Allcott and Gentzkow 2017, Lazer et al. 2018). The fear is not only that citizens are exposed to inaccurate claims, but that the very idea of shared truth is eroded, leaving individuals distrustful of all information sources.

The rise of generative artificial intelligence has given these concerns a new urgency. Tools such as ChatGPT, Midjourney, and Sora can now produce highly realistic text, images, and videos at almost no cost. This has raised alarm among policymakers: the EU has adopted its AI Act, and civil society groups are pressing for stronger accountability from digital platforms.

The natural concern is that news organisations could be the hardest hit. In the extreme, if fabricated content proliferates and deepfakes circulate widely, readers may give up on trying to distinguish fact from fiction altogether. Mainstream news outlets, already under pressure from declining revenues (Djourelova et al. 2025), would then risk losing both their audience’s trust and its demand for their content.

Our recent research (Campante et al. 2025) suggests a more nuanced perspective. Exposure to AI-generated misinformation does make people more worried about the quality of information available online. But it can also increase the value they attach to outlets with a reputation for credibility, because help in distinguishing real from synthetic content becomes more pressing. In the study, we examine whether AI misinformation inevitably undermines demand for mainstream news or instead boosts it, as credibility becomes relatively scarcer and therefore more valuable. To shed light on this question, we partnered with Süddeutsche Zeitung (SZ), one of Germany’s most respected news outlets, to run a field experiment with real readers.

The experiment: Design and outcomes

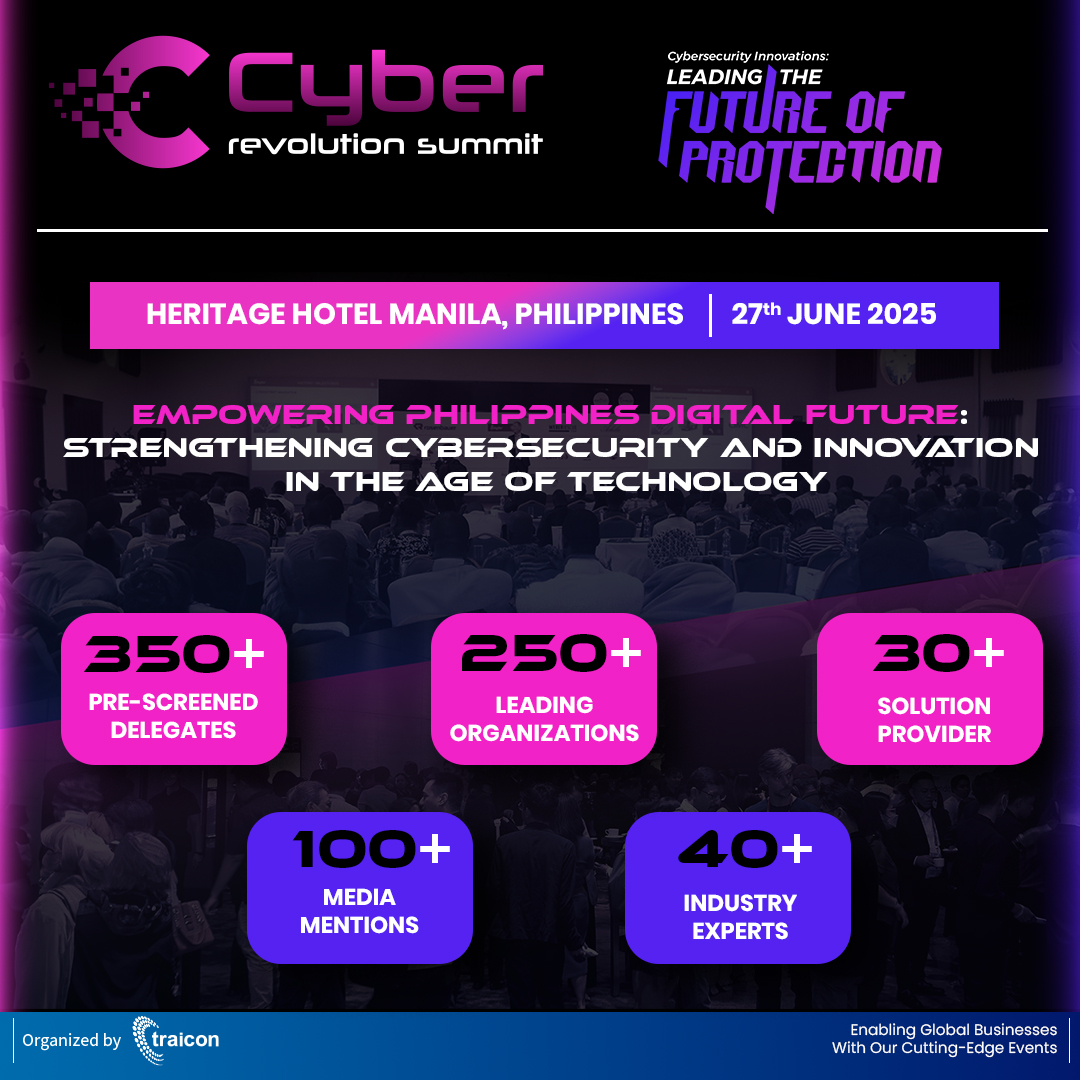

The experiment involved thousands of SZ readers, who were randomly divided into two groups. Readers in the treatment group were invited to take a short quiz asking them to distinguish real images from AI-generated ones; Figure 1 shows one of the questions. Readers in the control group were shown similar authentic pictures and asked different questions about their content.

Figure 1 Example question from AI quiz

We then tracked a range of outcomes. First, we collected attitudinal measures from a survey following the quiz, including reported concern about misinformation and trust in news. Second, for a subset of subscribers, we observed behavioural measures of readership: how many times they visited SZ digital platforms in the weeks before and after the quiz. Third, we tracked retention, examining whether these subscribers kept their subscriptions in the months after the intervention.

This design allowed us to capture both immediate shifts in perception and longer-run changes in behaviour.

Main findings

The results are striking and economically meaningful. The AI quiz was effective in highlighting how difficult it is to distinguish real images from AI-generated ones. Compared with the control group, readers exposed to the quiz reported greater concern about the reliability of online information, as well as lower trust in the content of various media outlets and platforms. Notably, trust in SZ itself also declined, as shown in Figure 2.

Figure 2 Effect of AI treatment on trust (standard deviation units)

Far from disengaging, however, treated readers consumed more SZ content: the number of visits to the SZ website and app increased by 2.5% in the first 3–5 days after the intervention. Moreover, as documented in Figure 3, treated individuals were 1.1% more likely than those in the control group to maintain their subscription months later, which corresponds to a reduction of about one-third in the baseline attrition rate.

Figure 3 Cumulative dropped subscriptions by group
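The two retention figures can be related with simple arithmetic. This is a rough sketch under an assumption the column does not state explicitly: that the 1.1% figure is a difference in percentage points (pp) and that “about one-third” is approximate. The symbols $a_0$ (control-group attrition) and $a_1$ (treated-group attrition) are introduced here for illustration only.

$$
\Delta = a_0 - a_1 \approx 1.1\ \text{pp}, \qquad \frac{\Delta}{a_0} \approx \frac{1}{3}
\quad\Longrightarrow\quad
a_0 \approx 3 \times 1.1 \approx 3.3\ \text{pp}, \qquad a_1 \approx 3.3 - 1.1 = 2.2\ \text{pp}
$$

Under this reading, roughly 3 in 100 control-group subscribers dropped out over the period, against roughly 2 in 100 treated subscribers; the precise rates are those plotted in Figure 3.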

These findings, consistent with a simple theoretical model in the study, suggest that when the threat of misinformation becomes salient, the value of credible news increases. The prospect of AI-generated falsehoods did not drive readers away from news content; instead, it reinforced their reliance on a trusted outlet that could help mitigate the effects of the broad decline in the quality of the information environment.

The average effects conceal significant differences across readers. The treatment had a particularly strong impact on those who initially reported lower engagement with online political information. For these readers, awareness of AI’s ability to fabricate content made SZ’s reporting more attractive. Similarly, treated readers who found the quiz hard showed significant increases in engagement with the SZ website relative to the control group.

Implications for the media industry and society

Our findings carry important implications for both business and society. For the news industry, they point to a potential strategy for navigating the disruptions caused by AI-generated content. If outlets can establish and maintain trust with their readers, the rise of synthetic content becomes not just a threat but also an opportunity. As trust grows scarcer, its value rises, and audiences may be more willing to pay for reliable journalism. The open question, and one for future research, is how news organisations can best build and sustain this trust in an environment where misinformation is increasingly sophisticated and pervasive.

At the societal level, the results suggest that the proliferation of AI-powered misinformation does not inevitably lead to a collapse of trust in information overall. The fear that cheap, convincing fake content will displace authentic reporting overlooks a countervailing force: when misinformation spreads, the payoff to credibility increases. However, our framework also highlights that sustaining this advantage is far from easy. The threshold for trustworthiness rises with the volume and sophistication of misinformation, meaning that media outlets cannot stand still but must continually invest in helping readers distinguish fact from fabrication, keeping pace with the rapid evolution of AI. As generative technologies advance at breakneck speed, this dynamic will only grow more central to the future of journalism and the health of the information environment.

Source: VoxEU